The New Triad: Integrating AI, Privacy, and Cybersecurity

Artificial intelligence (AI) can magnify whatever exists in the underlying data, whether good, bad, or ugly. As AI becomes increasingly integral to operations, robust data governance is more crucial than ever.

While AI has transformed and accelerated our use of data, the foundational principles of data governance are not new. Over the decades, we have refined the lenses of cybersecurity and data privacy, creating a solid foundation to build upon.

As our understanding of the risks posed by new AI tools deepens, industry leaders are calling for the integration of data protection best practices into AI governance. Responding to this need, the National Institute of Standards and Technology (NIST) has started exploring ways to support this goal.

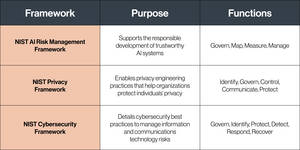

In June 2024, NIST released a concept paper for a Data Governance Management Profile. This framework introduces a new structure while incorporating established best practices from the NIST Triad: the AI Risk Management Framework (AI RMF), the NIST Privacy Framework, and the NIST Cybersecurity Framework (CSF).

Overview of the NIST Data Governance Management Profile (DGM)

NIST’s concept paper notes that the DGM emerged from informal stakeholder feedback to the NIST Privacy Engineering Program. Industry leaders wanted resources that could bring together the NIST Privacy Framework, the CSF, and the AI RMF under one umbrella. Based on Coalfire’s experience implementing these frameworks for organizations, the profile goes beyond the sum of the frameworks’ parts. Its core value is its ability to align the different stakeholders involved in data governance.

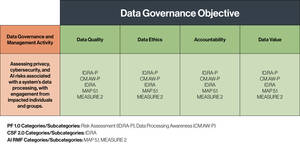

To achieve this, NIST proposes a matrix that maps data governance objectives to specific cross-functional activities. At the heart of the matrix are categories and subcategories from the three frameworks, enabling teams to quickly understand key best practices during each activity.

Data Governance Objectives

NIST’s proposed data governance objectives are:

- Data quality: Ensuring that data assets are accurate, fair, and fit for purpose. Critical challenges arise from data provenance and lineage. For personal data, organizations should carefully track and manage the purposes associated with consent.

- Data ethics: Establishing behavioral norms and standards for responsible data governance. While organizations may establish their own “North Star” values, violating consumer expectations will quickly erode trust.

- Accountability: Ensuring decision-makers accept responsibility for data governance decisions and their impacts. Crucially, the rationale and results should be documented, with analysis encompassing the domains of AI, privacy, and cybersecurity.

- Data value: Maximizing the benefits of data across the enterprise, while managing associated risks. Tensions arise when decisions about data create trade-offs between stakeholders.

Practically speaking, these objectives allow stakeholders to highlight the importance of each factor, propose appropriate metrics, and ultimately ensure data is fit for purpose across the organization. For example, metrics for data quality might include accuracy, timeliness, and completeness.
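To make the data quality example concrete, here is a minimal sketch of how the three metrics named above might be computed over a small record set. The function names, field names (`id`, `email`, `updated_at`), the 30-day freshness window, and the sample data are all assumptions made for illustration; they do not come from NIST's concept paper, and real definitions would be agreed on by the stakeholders involved.

```python
from datetime import datetime, timedelta, timezone


def completeness(records, required_fields):
    """Share of required field values that are present (not None or empty)."""
    total = len(records) * len(required_fields)
    if total == 0:
        return 1.0
    filled = sum(
        1
        for record in records
        for field in required_fields
        if record.get(field) not in (None, "")
    )
    return filled / total


def timeliness(records, now, max_age, ts_field="updated_at"):
    """Share of records last updated within max_age of `now`."""
    if not records:
        return 1.0
    fresh = sum(
        1 for record in records
        if ts_field in record and now - record[ts_field] <= max_age
    )
    return fresh / len(records)


def accuracy(records, reference, key="id", field="email"):
    """Share of checkable records whose field matches a trusted reference."""
    checked = [r for r in records if r.get(key) in reference]
    if not checked:
        return 1.0
    correct = sum(1 for r in checked if r.get(field) == reference[r[key]])
    return correct / len(checked)


# Hypothetical sample data, for illustration only.
now = datetime(2024, 7, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "email": "a@example.com", "updated_at": now - timedelta(days=2)},
    {"id": 2, "email": "", "updated_at": now - timedelta(days=40)},
    {"id": 3, "email": "c@example.com", "updated_at": now - timedelta(days=10)},
    {"id": 4, "email": "d@example.org", "updated_at": now - timedelta(days=1)},
]
# Trusted values for the records that can be verified (record 2 cannot).
reference = {1: "a@example.com", 3: "c@example.com", 4: "d@example.com"}

scores = {
    "completeness": completeness(records, ["id", "email"]),
    "timeliness": timeliness(records, now, timedelta(days=30)),
    "accuracy": accuracy(records, reference),
}
print(scores)
```

Each metric is reported as a share between 0 and 1, so teams can set thresholds per objective and track them over time. Note that accuracy is only measurable against a trusted reference, which is one reason data provenance matters.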

Data Governance Activities

NIST’s initial proposed activities are:

- Establishing data processing policies, processes, and procedures to manage the legal, regulatory, risk, and operational environment in which the organization processes data

- Evaluating the organizational context in which systems are developed and deployed

- Assessing privacy, cybersecurity, and AI risks associated with a system’s data processing, with engagement from impacted individuals and groups

There’s a lot to unpack here, but the emphasis is clear: aligning stakeholders and ensuring everyone is on the same page is paramount for effective data governance.
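One way to picture the proposed matrix is as a lookup table keyed by objective and activity, with each cell holding references into the three frameworks. The sketch below is only an illustration of that structure: the `GovernanceMatrix` class, its method names, and the placeholder references are hypothetical, and the cell contents are not the mappings from NIST's concept paper.

```python
from collections import defaultdict

OBJECTIVES = ("data quality", "data ethics", "accountability", "data value")
ACTIVITIES = (
    "establish policies, processes, and procedures",
    "evaluate organizational context",
    "assess privacy, cybersecurity, and AI risks",
)
FRAMEWORKS = ("AI RMF", "Privacy Framework", "CSF")


class GovernanceMatrix:
    """Hypothetical objective-by-activity matrix of framework references."""

    def __init__(self):
        self._cells = defaultdict(list)

    def add(self, objective, activity, framework, reference):
        """Attach a framework reference to one cell of the matrix."""
        if objective not in OBJECTIVES:
            raise ValueError(f"unknown objective: {objective}")
        if activity not in ACTIVITIES:
            raise ValueError(f"unknown activity: {activity}")
        if framework not in FRAMEWORKS:
            raise ValueError(f"unknown framework: {framework}")
        self._cells[(objective, activity)].append((framework, reference))

    def lookup(self, objective, activity):
        """Return the references recorded for one cell (possibly empty)."""
        return list(self._cells.get((objective, activity), []))

    def gaps(self):
        """List cells that have no framework reference yet."""
        return [
            (o, a)
            for o in OBJECTIVES
            for a in ACTIVITIES
            if not self._cells.get((o, a))
        ]


# Placeholder entries only; a real matrix would cite actual
# categories and subcategories chosen by the organization.
matrix = GovernanceMatrix()
matrix.add("accountability", ACTIVITIES[0], "CSF", "placeholder: policy category")
matrix.add("accountability", ACTIVITIES[0], "Privacy Framework",
           "placeholder: governance policies category")
matrix.add("data quality", ACTIVITIES[2], "AI RMF", "placeholder: measurement category")

print(matrix.lookup("accountability", ACTIVITIES[0]))
print(len(matrix.gaps()), "cells still unmapped")
```

A structure like this lets each team see, for a given activity, which practices from the other two frameworks apply alongside its own, and the `gaps` check shows where no owner has weighed in yet.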

Natural Evolution of NIST’s Established Frameworks

The DGM represents a natural evolution of NIST’s established frameworks. The NIST Privacy Framework, CSF, and AI RMF were all designed to work in tandem, and recent and planned changes reinforce this harmony. Two stand out: the major update to CSF 2.0 earlier this year, which introduced the Govern function, and the planned iteration of the NIST Privacy Framework to realign it with the updated CSF.

What the DGM creates is greater visibility into the dependencies between the frameworks. As Coalfire has noted while supporting the implementation of multiple NIST Frameworks, different teams tend to take ownership of each framework. By explicitly approaching the three frameworks together, the profile offers a blueprint for interdepartmental coordination. This approach ensures that privacy, cybersecurity, and AI risk management are not siloed but integrated into a cohesive strategy.

NIST's characteristic style is evident in this approach. It is non-prescriptive yet provides clear direction, giving organizations the flexibility to tailor the framework to their unique needs. This profile is a significant step toward unifying data governance practices and, in doing so, building confidence in the development and deployment of AI tools. It sets a standard that can be widely adopted across industries, creating a stable data governance foundation from which to adapt to evolving challenges in AI, privacy, and cybersecurity.

The Business Imperative of Proactive Data Governance

Often, businesses adopt frameworks because of compliance obligations.

In this instance, the rationale for adopting the DGM comes from more concrete business objectives. First, there is significant business benefit to “doing the right thing” with AI. In a time when data drives value, consumer trust is imperative. Concern about AI is high, and missteps in this field draw disproportionate attention. For example, a scandal involving biased AI decision-making can severely damage a company’s reputation and bottom line.

Second, AI models so far lack effective mechanisms for “untraining.” Much like a cake that has already been baked, a trained model does not let organizations selectively remove ingredients, so correcting a data problem after the fact generally means retraining. Because training is by far the highest-cost part of the process, putting data governance practices in place and proactively ensuring cross-functional alignment reduces long-term costs.

Finally, implementing the DGM enables organizational resilience. We have already seen the EU AI Act begin to create ripple effects. Within the US, Biden’s Executive Order on AI has generated federal agency actions, like US Secretary of the Treasury Janet Yellen’s recent call for stakeholder input within the financial sector. Colorado passed comprehensive AI legislation, potentially starting a patchwork of state laws like those for data privacy.

With so many unknowns, proactive businesses would do well to explore the benefits of a unifying framework like NIST’s proposed DGM. The next draft for public comment will likely be available before the end of the year.

In the meantime, businesses can set themselves up for success by reviewing and implementing the individual NIST frameworks. Because this work is interdisciplinary, bring the stakeholders who oversee these processes together in interdepartmental roundtables. As NIST has recognized, with AI accelerating the exposure of warts and wisdom alike, a siloed approach is not an option.