Advisory

Anthropic vs. OpenAI: Battle of the Frontier Labs

A Weeklong AI Showdown Reveals the Future of Model Competition

The AI world saw one of its most public rivalries flare up last week as Anthropic and OpenAI exchanged jabs through ads, release drops, and social‑media commentary. While competition between frontier labs is nothing new, the speed and tone of this particular exchange highlight how rapidly the market is maturing—and how critical governance, security, and responsible deployment have become for enterprise teams.

Below is a recap of the week’s events, the key differences between the models each company released, and the security implications that matter for organizations adopting AI and agentic workflows.

How It Started: Anthropic’s "Anti‑Advertising" YouTube Spots

Anthropic ignited the exchange by releasing four satirical commercials on its YouTube channel. Each video depicted a near future in which AI systems serve ads, implying that OpenAI’s recent announcements around paid placements and ad-supported agents were a step in the wrong direction.

Below are the Anthropic commercials for your viewing pleasure:

- “Is my essay making a clear argument?”

- “Can I get a six pack quickly?”

- “What do you think of my business idea?”

- “How can I communicate better with my mom?”

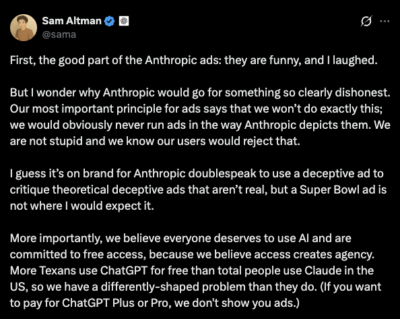

OpenAI Responds on X

OpenAI CEO Sam Altman quickly responded on X, offering a characteristically terse rebuttal.

His post was interpreted as a mix of pushback and a declaration that the market—not satire—would determine the future of AI monetization strategies.

Read full tweet here.

The Release Race: Opus 4.6 vs. GPT-5.3-Codex

One day after the ad exchange, Anthropic released its newest frontier model, Claude Opus 4.6. Just twenty minutes later, OpenAI dropped GPT-5.3-Codex.

Release Notes for each model:

- Anthropic Opus 4.6: https://www.anthropic.com/news/claude-opus-4-6

- OpenAI GPT-5.3-Codex: https://openai.com/index/introducing-gpt-5-3-codex/

The near-simultaneous timing did not go unnoticed.

Why This Matters: Security Considerations for Agentic Systems

As companies adopt agentic architectures, the competitive model releases matter—but secure implementation matters more. Both Anthropic and OpenAI emphasize safety, but no foundation model can fully protect an enterprise environment on its own.

Guardrail Gates: A Needed Control Layer

Guardrail Gates act as inspection and control points that enforce security, policy, and operational boundaries across an agent’s lifecycle.

The three gate types are:

• Pre‑Gates: Validate and sanitize inputs (user prompts, retrieved knowledge, tool outputs).

• Mid‑Gates: Govern agent planning and tool use—the highest‑risk phase for real‑world actions.

• Post‑Gates: Inspect model outputs before they reach the user or trigger external systems.
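To make the three gate types concrete, here is a minimal sketch of how they might look in code. It is an illustration under stated assumptions, not a production design: the regex patterns, the tool names in `ALLOWED_TOOLS`, and the `GateResult` type are all hypothetical, and a real deployment would likely rely on dedicated classifiers and policy engines rather than simple pattern matching.

```python
import re
from dataclasses import dataclass, field

# Hypothetical patterns and tool names, chosen only for illustration.
INJECTION_PATTERN = re.compile(r"ignore (all )?previous instructions", re.IGNORECASE)
SECRET_PATTERN = re.compile(r"sk-[A-Za-z0-9]{8,}")
ALLOWED_TOOLS = {"search_docs", "read_ticket"}


@dataclass
class GateResult:
    allowed: bool
    payload: str
    reasons: list[str] = field(default_factory=list)


def pre_gate(text: str) -> GateResult:
    """Validate an input (user prompt, retrieved document, or tool output)."""
    if INJECTION_PATTERN.search(text):
        return GateResult(False, "", ["possible prompt injection"])
    return GateResult(True, text.strip())


def mid_gate(tool_name: str) -> GateResult:
    """Allow a planned tool call only if the tool is on the allowlist."""
    if tool_name not in ALLOWED_TOOLS:
        return GateResult(False, "", [f"tool not allowed: {tool_name}"])
    return GateResult(True, tool_name)


def post_gate(output: str) -> GateResult:
    """Redact secret-like strings before the output leaves the system."""
    redacted, count = SECRET_PATTERN.subn("[REDACTED]", output)
    reasons = [f"redacted {count} secret(s)"] if count else []
    return GateResult(True, redacted, reasons)
```

In this sketch, each gate returns the same result type, so an orchestrator can log the `reasons` field uniformly and stop the agent run as soon as any gate reports `allowed=False`.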

Controls That Guardrail Gates Commonly Enforce

• No leakage of sensitive or regulated data (PII, secrets, PHI, CUI, etc.)

• Safe and compliant tool calls (e.g., no destructive commands, bounded API spend)

• Enforced JSON schemas and data integrity constraints

• Classification filtering (toxicity, bias, safety)

• Auditable logs for regulatory frameworks (SOC 2, HIPAA, CMMC, PCI)

• Budget controls on cost, time, or number of agent iterations
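Two of the controls above, budget limits and enforced output schemas, lend themselves to a short sketch. The example below is one possible shape, assuming a hypothetical `RunBudget` tracker and a hypothetical `TICKET_SCHEMA`; none of these names come from a specific product or library, and a real system might use a full JSON Schema validator instead of the simple type check shown here.

```python
import json
import time
from dataclasses import dataclass, field


class BudgetExceeded(Exception):
    """Raised when an agent run exceeds one of its configured limits."""


@dataclass
class RunBudget:
    max_iterations: int
    max_cost_usd: float
    max_seconds: float
    iterations: int = 0
    cost_usd: float = 0.0
    started_at: float = field(default_factory=time.monotonic)

    def charge(self, cost_usd: float) -> None:
        """Record one agent step and raise if any limit is now exceeded."""
        self.iterations += 1
        self.cost_usd += cost_usd
        if self.iterations > self.max_iterations:
            raise BudgetExceeded("iteration limit reached")
        if self.cost_usd > self.max_cost_usd:
            raise BudgetExceeded("cost limit reached")
        if time.monotonic() - self.started_at > self.max_seconds:
            raise BudgetExceeded("time limit reached")


# Hypothetical schema: required field name -> expected Python type.
TICKET_SCHEMA = {"ticket_id": int, "summary": str, "severity": str}


def validate_output(raw: str, schema: dict[str, type]) -> dict:
    """Parse model output as JSON and check required fields and types."""
    data = json.loads(raw)  # raises a ValueError subclass on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key, expected in schema.items():
        if key not in data:
            raise ValueError(f"missing field: {key}")
        if not isinstance(data[key], expected):
            raise ValueError(f"wrong type for field: {key}")
    return data
```

An orchestrator could call `budget.charge(step_cost)` once per agent iteration and pass every structured model response through `validate_output` before acting on it, treating either exception as a reason to halt and log the run.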

As organizations scale up AI deployments and start adopting agentic workflows, guardrails become a foundational requirement, not an optional enhancement.

How Coalfire Helps: Security of AI + Security with AI

At Coalfire, we’re deeply invested in helping organizations transition from early AI experimentation to secure, production‑grade systems. Teams across industries are struggling with two different but related challenges, and we’ve built dedicated services for each.

Security of AI | ForgeAI Services

How does your company securely build and deploy AI systems and agents?

ForgeAI helps organizations implement secure GenAI and agentic architectures with enterprise‑grade security, privacy, and compliance baked in from day one.

Common outcomes include:

- Architectures aligned with emerging AI regulations and governance

- Secure-by-default agent workflows

- Accelerated time to market for AI‑driven capabilities

- Reduced security risk and improved operational resilience

Security with AI | LegionAI Services

How can AI agents improve and scale security and compliance operations?

LegionAI helps teams automate repetitive security workflows, reduce analyst load, and improve both detection and compliance outcomes. Automating low-value tasks frees your security team to focus on high-value work.

Typical benefits include:

- Lower mean time to detect (MTTD) and fewer recurring control failures

- Automated evidence gathering and reporting for compliance frameworks

- Meaningful cost reduction (often 10–20%) while expanding capability

Final Takeaway

The “Anthropic vs. OpenAI” rivalry is more than social‑media theater—it reflects the accelerating pace of frontier AI advancement. But for enterprises, model performance is only one dimension. The real differentiator is how securely and responsibly those models are implemented within agentic systems.

With the right guardrails—and the right partner—organizations can capture the value of next‑generation AI while staying compliant, resilient, and secure.