AI Governance

A Practical Approach for GenAI and Agentic Security

How to design systems that stay secured from all sides.

We don’t only eat deep dish in Chicago, you know. We don’t only eat “pizza,” either. Tavern-style, thin crust, New York slices, Detroit squares. It’s all “pizza,” but good luck ordering like that.

And yet, that’s exactly how so many AI programs start.

Someone in charge announces that the company’s “going all in on AI,” as if AI were one dish, served the same way everywhere. But generative models and agents come in many forms, even if they all share similar ingredients.

For product leaders and security teams, the pressure is twofold: deliver AI features fast, and prove to regulators, auditors, and customers that those features can be trusted. That’s much easier said than done with AI, especially when there’s confusion about what, exactly, is being built. One missed control can leak sensitive data into prompts and logs, while a misconfigured agent can trigger destructive actions at scale. And compliance evidence, the lifeblood of regulated industries, can fragment across layers that didn’t even exist a year ago.

None of this makes AI inherently dangerous. It just means companies need to be especially careful about how they implement it. Unfortunately, adoption is leaving security in the dust, moving far faster than the frameworks meant to govern it.

Why AI Doesn’t Fit Old Security Rules

Traditional software is largely deterministic. Give it the same input a thousand times and it’ll return the same output a thousand times. That predictability has long made it easier to build security controls. But AI breaks the mold in three ways:

- It introduces uncertainty. AI doesn’t always give the same answer to the same question. Developers can adjust sampling parameters such as temperature or top_p to make outputs steadier or more varied, but some variability is inherent to the design, and even low-temperature settings don’t guarantee identical outputs. That’s great for creative prompts, but it poses security risks when models handle tasks like access management for sensitive data.

- It works through tools. AI agents can trigger APIs, run commands, and move data across enterprise systems. What makes them powerful also makes them risky: a single error or malicious prompt can jeopardize the entire organization and its value chain. If permissions are too broad or monitoring too porous, a harmful action can slip through, and the agent can keep executing at machine speed.

- It depends on context. Retrieval-augmented generation (RAG) pulls information from relevant sources (a company database, knowledge base, or index) to improve model responses. That means sensitive data flows through prompts, indexes, and logs. Those flows need the same governance and monitoring as any other channel carrying regulated or confidential data.
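
As a minimal sketch of what governing those flows can look like, the Python below masks sensitive values in retrieved text before it is placed into a prompt, and returns the prompt together with per-type redaction counts that are safe to log. The two regular expressions, the `[EMAIL]`/`[SSN]` placeholders, and the `redact` and `build_prompt` helpers are illustrative assumptions, not any product's API; a production system would typically rely on a vetted data-classification or DLP service rather than hand-written patterns.

```python
import re

# Illustrative patterns only (assumption): real deployments need broader,
# vetted detection for the data types they actually handle.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US Social Security number format
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Hypothetical helper: replace each match with a [LABEL] placeholder and count matches."""
    counts: dict[str, int] = {}
    for label, pattern in SENSITIVE_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


def build_prompt(question: str, retrieved_chunks: list[str]) -> tuple[str, dict[str, int]]:
    """Hypothetical helper: assemble a RAG prompt from redacted text only."""
    totals: dict[str, int] = {}
    cleaned = []
    for chunk in [question, *retrieved_chunks]:
        clean, counts = redact(chunk)
        cleaned.append(clean)
        for label, n in counts.items():
            totals[label] = totals.get(label, 0) + n
    safe_question, *safe_chunks = cleaned
    context = "\n".join(safe_chunks)
    return f"Context:\n{context}\n\nQuestion: {safe_question}", totals


prompt, totals = build_prompt(
    "What is the status of case 12?",
    ["Contact jane.doe@example.com about case 12.", "SSN on file: 123-45-6789."],
)
# Log only the counts, never the raw values.
print(totals)  # {'EMAIL': 1, 'SSN': 1}
```

Because redaction happens before the prompt is assembled, any downstream log of the prompt carries the masked text rather than the original values.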

Security leaders know the broad-strokes risks associated with these characteristics: hallucinations, prompt injection, data leakage. What’s missing is a practical way to map those risks back to the architectures AI runs on. NIST’s AI Risk Management Framework (AI RMF) and ISO/IEC 42001, the new AI management system standard, offer guiding principles, and OWASP’s LLM Top 10 catalogs emerging vulnerabilities. But organizations also need design patterns for implementing those controls in production today, and those patterns are still largely missing across the industry.

A System, Not a Box

Another common misconception is that AI agents are single, static “things.” They’re systems. Dynamic systems, made up of different layers working together.

You’re probably familiar with one layer, the large language model (LLM), which is responsible for reasoning. There are also tools and resources to take action, policies and instructions that shape behavior, memory to carry context, and orchestration to manage flow and approvals, to name just a few.

Seeing agents as systems like this matters because it helps leaders assign accountability, scope permissions correctly, and produce the audit evidence stakeholders demand.

It also makes organizations faster. Features pass review the first time. Compliance evidence is ready when auditors ask. Customers and partners see that controls are real and testable.

When the architecture isn’t visible, risks go unnoticed and damage accumulates. A compliance gap in memory handling may not surface until audit time. A mis-scoped permission in orchestration can snowball into agent misuse across systems. Will that delay the launch? Lead to costly remediation? Damage brand reputation or shareholder confidence? The answer can be all of the above.
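
One way to keep the architecture visible is a machine-readable inventory of the layers, each with an accountable owner and explicitly scoped permissions, reviewed automatically. The sketch below assumes a hypothetical `Layer` record and two simple review rules (every layer needs an owner; no layer gets a wildcard permission). The layer names, teams, and rules are illustrative, not a prescribed schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Layer:
    """One layer of an agentic system (hypothetical inventory record)."""
    name: str
    owner: Optional[str]         # accountable team; None means unassigned
    permissions: frozenset[str]  # actions this layer is allowed to perform


def review(layers: list[Layer]) -> list[str]:
    """Return findings for layers that lack an owner or hold a wildcard permission."""
    findings = []
    for layer in layers:
        if not layer.owner:
            findings.append(f"{layer.name}: no accountable owner")
        if "*" in layer.permissions:
            findings.append(f"{layer.name}: wildcard permission")
    return findings


inventory = [
    Layer("model", "ml-platform", frozenset({"invoke"})),
    Layer("memory", None, frozenset({"read", "write"})),
    Layer("orchestration", "app-team", frozenset({"*"})),
]

for finding in review(inventory):
    print(finding)
# memory: no accountable owner
# orchestration: wildcard permission
```

Keeping the inventory as data rather than only as a diagram lets the same checks run whenever a layer changes, so a gap like the unowned memory layer or the over-broad orchestration grant surfaces before an audit does.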

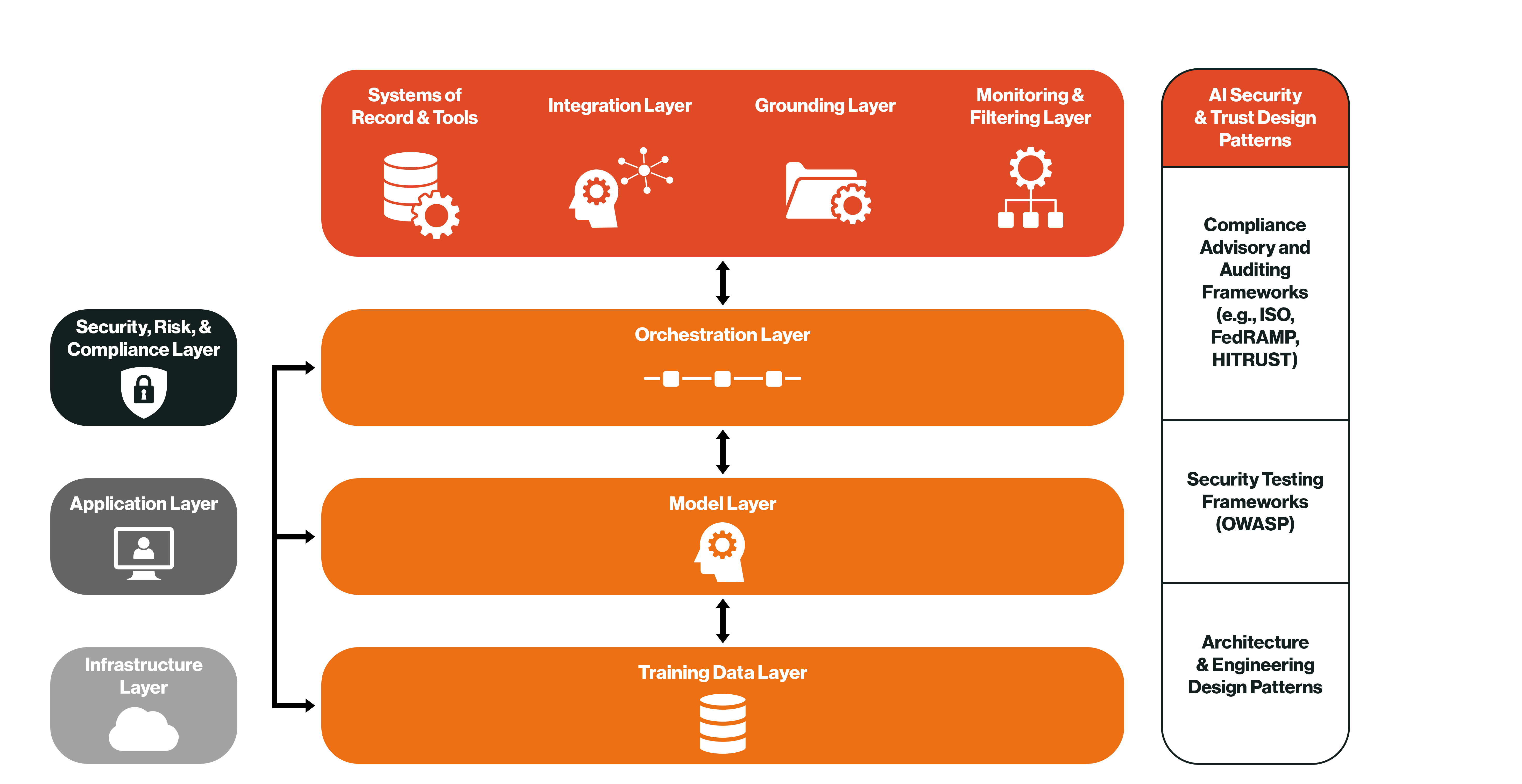

Every Layer, Secured on All Sides

To help organizations avoid these pitfalls, Coalfire developed an approach to AI that spans the full system, taking stock of each layer to show what exists and how to protect it.

1. We start by defining the anatomy of the system in the terms the most mature teams in the industry already use: application/UI, orchestration, training data, model, grounding, and so on. This gives security, product, and enterprise teams a common language for what needs to be protected, what needs to be compliant, and where those responsibilities sit in the system.

2. Then we apply a security overlay from the Coalfire AI framework to each of those layers. It poses questions that show developers what to build and security teams what to test:

- Application: How do we design and code GenAI features so users get value without creating risky pathways (for example, through safe approval flows, clear permissions, and explainable outputs)?

- Training data: What data influences models (third-party, in-house)? How do lineage, consent, and licensing affect risk and compliance?

- Model: How do we evaluate and select models for tasks where safety, security, and reliability matter most?

- Orchestration: How do we structure prompts, context, and flow so security and privacy requirements are enforced, not bypassed?

- Grounding (RAG): How do we ensure agents consult the right policies and data at the right time, and avoid sensitive sources they aren’t meant to use?

- Tools & integration: How do we ensure agent actions (tools, resources, other agents) adhere to least privilege and separation of duties across protocols like Model Context Protocol (MCP) and Agent2Agent (A2A)?

- Monitoring: How do we detect, at runtime, whether inputs/outputs comply with policy (content, privacy, IP) and whether attacks are underway?

- Infrastructure: Do networks, containers, and logs reinforce controls instead of undermining them?

3. Finally, we use the framework to connect these safeguards back to compliance. Each question and control is mapped to existing and emerging standards, including NIST AI RMF, ISO 42001, and OWASP’s LLM Top 10, so the same work that protects the system also produces the evidence auditors, regulators, and customers expect. Instead of scrambling to bolt on compliance after the fact, teams build security into their systems and are audit-ready from the start.
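
As a minimal sketch of how one control can protect the system and produce evidence at the same time, the Python below does three things. It gates an agent’s tool calls against a per-agent allowlist, holds high-impact tools for human approval, and writes every decision to an audit log tagged with the framework references it supports. `ToolPolicy`, `AuditLog`, `authorize`, the tool names, and the control-to-framework mapping are all hypothetical. The references point to the NIST AI RMF’s Manage function and OWASP’s Excessive Agency risk for illustration only, not as an authoritative mapping.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical mapping from a control to the framework items it can evidence.
CONTROL_REFS = {
    "tool_least_privilege": ["NIST AI RMF: Manage", "OWASP LLM Top 10: Excessive Agency"],
}


@dataclass(frozen=True)
class ToolPolicy:
    """Per-agent allowlist; tools in requires_approval also need a human sign-off."""
    agent_id: str
    allowed_tools: frozenset[str]
    requires_approval: frozenset[str] = frozenset()


@dataclass
class AuditLog:
    """In-memory stand-in for an append-only evidence store."""
    records: list[dict] = field(default_factory=list)

    def record(self, **entry) -> None:
        entry["timestamp"] = datetime.now(timezone.utc).isoformat()
        self.records.append(entry)


def authorize(policy: ToolPolicy, tool: str, approved: bool, log: AuditLog) -> str:
    """Return "allow", "deny", or "pending_approval", logging the decision either way."""
    if tool not in policy.allowed_tools:
        decision = "deny"  # least privilege: anything not granted is refused
    elif tool in policy.requires_approval and not approved:
        decision = "pending_approval"  # separation of duties: a human must approve
    else:
        decision = "allow"
    log.record(
        agent=policy.agent_id,
        tool=tool,
        decision=decision,
        control="tool_least_privilege",
        refs=CONTROL_REFS["tool_least_privilege"],
    )
    return decision


log = AuditLog()
policy = ToolPolicy(
    agent_id="billing-agent",
    allowed_tools=frozenset({"read_invoice", "issue_refund"}),
    requires_approval=frozenset({"issue_refund"}),
)
print(authorize(policy, "read_invoice", approved=False, log=log))     # allow
print(authorize(policy, "issue_refund", approved=False, log=log))     # pending_approval
print(authorize(policy, "delete_customer", approved=False, log=log))  # deny
print(len(log.records))  # 3
```

Denying by default means a newly added tool stays unusable until it is deliberately granted. Each log record also carries the control name and references an assessor would ask about, so the evidence accumulates as a side effect of normal operation.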

Delivering on this framework takes more than a checklist. It takes advisors, testers, and assessors working in concert, and Coalfire is one of the few firms that brings all three under one roof.

We know what auditors are looking for because we are certified assessors with decades of experience working with corporate and federal clients. We know where attackers will strike because we are testers. DivisionHex, our team of risk testers and threat hunters, pressure-tests the entire architecture with realistic attack patterns, then helps you address the issues before launch. We know what engineers need because we work alongside them to design and build everything from code paths and prompt structures to agent-to-agent (A2A) permissions and cloud guardrails.

And we know it works because we use it ourselves. The same framework that guides our client work underpins Coalfire’s own AI programs. Every safeguard we recommend has been built, tested, and defended in practice. It doesn’t get more practical than that.

The Point

Speed is critical in the AI race. But so is security. The right security solutions don’t slow you down; they make sure that what you ship is secured on all sides. That foresight is the difference between AI experiments that stall and AI systems that scale.