Case Study

What would happen if an attacker cloned your CEO's voice with AI, and called you?

Coalfire put Albert Invent employees to the test.

The Mission: Can AI Voice Cloning Break Albert Invent?

In 2024, Coalfire hackers were approached by Albert Invent, a leading platform for accelerating research and development operations for thousands of scientists in over thirty countries around the world.

Albert Invent’s CEO and co-founder, Nick Talken, posed a question:

“How would the team at Albert do if we were targeted by attackers using my voice?”

Challenge accepted.

Coalfire hackers dove head-first into research with Albert Invent to understand how effective current open-source tools are at generating fake voice messages.

How could voice messages be used in concert with phishing emails? And would anyone take the bait?

Building the Attack: Realistic, Urgent, and Hard to Ignore

Coalfire's hackers combined their deep expertise and research to cook up a voice clone so convincing it could fool a pro.

The four pillars of a convincing clone are:

1. Quality over everything: If the voice wasn’t crystal clear and believable, the whole scam would fall flat. Minimal distortion was non-negotiable.

2. Keep it short and urgent: Long robotic speeches get caught, so we aimed for six seconds of pure “drop everything, this matters now” energy.

3. Ambient noise is key: Airport buzz, office chatter, street sounds — whatever made the fake voicemail feel like it was recorded in a real spot.

4. Words matter: Straightforward greeting, quick hit on the real issue, no tech jargon to trip up the mark, and a direct nod to the email they’d get.

A Hacker's Diary: How We Did It

This deepfake breakdown is for legit ops only: to learn the tech and spot the tricks. We don’t condone hacks, scams, or fraud. Stay legal. Stay sharp.

Step 1: Gather Food for the Beast

Scrape Nick’s voice from YouTube videos — pure, unfiltered gold.

Slice the audio into clips in Audacity to prep them perfectly for the AI model.

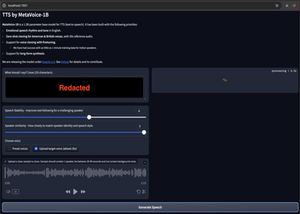

Throw the polished audio into a local instance of MetaVoice-1B to start the cloning magic!

Step 2: Nail Down the Script

Keep it short and sharp (no CEO monologues).

Kick off with a casual greeting, then immediately dive into the urgent issue.

Mention the email they’ll get, and keep the tech talk to a minimum to avoid raising red flags.

Step 3: Tweak Your Heart Out

Spend the time to fine-tune AI settings for maximum believability.

Dial “Speech Stability” to 6, crank “Speaker Similarity” to the max, and play with punctuation to create natural speech patterns.

The result? A hyper-realistic voicemail built in six seconds flat.

Step 4: Find the Angle for Urgency

Pick the perfect pretext from your OSINT intel. Something believable. Like their cloud hosting provider is having an outage.

Find the hook to spark urgency without suspicion.

Step 5: Plot a Calculated Course

Avoid long calls that raise suspicion.

Target after-hours, leave the AI-cloned voicemail, then hit them with the phishing email.

We sent these from aged, legit-looking domains (can’t spill those) with links to a flawless fake cloud login to grab credentials.

Step 6: Spin Up Some Spoofed Digits

Boost authenticity by spoofing Nick’s phone number with open-source tools, because why not make it look real?

Now we were ready to launch the attack on nine key individuals in engineering roles.

The Results: From Curiosity to Caution

9 out of 9 opened the email (Curiosity didn’t kill these cats)

2 clicked the link (Bold moves, but not a fatal mistake)

0 handed over credentials (Zero. Zip. Nada.)

Why?

Because Albert’s crew thinks like hackers: always suspicious, endlessly skeptical, and quick to double-check before they pull the trigger.

Their MFA? Locked tight. Least-privilege access? In full effect. Their culture? Straight-up “trust but verify.”

That slick AI-powered attack? Turned into nothing more than a paper tiger.
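If you want to track the same funnel in your own simulations, here is a minimal sketch in Python. The `CampaignResults` class and its field names are hypothetical, made up for illustration, and are not part of Coalfire's tooling. Only the counts (9 targeted, 9 opened, 2 clicked, 0 credentials) come from this engagement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CampaignResults:
    """Hypothetical container for phishing-simulation counts (illustrative only)."""

    targeted: int
    opened: int
    clicked: int
    submitted_credentials: int

    def rate(self, count: int) -> float:
        """Return count as a fraction of everyone targeted."""
        if self.targeted <= 0:
            raise ValueError("targeted must be greater than zero")
        return count / self.targeted

    def summary(self) -> dict[str, float]:
        """Return the three funnel rates, each relative to the targeted group."""
        return {
            "open_rate": self.rate(self.opened),
            "click_rate": self.rate(self.clicked),
            "credential_capture_rate": self.rate(self.submitted_credentials),
        }


# Counts reported in the results above.
results = CampaignResults(targeted=9, opened=9, clicked=2, submitted_credentials=0)
summary = results.summary()

for name, value in summary.items():
    # Format each rate as a whole-number percentage.
    print(f"{name}: {value:.0%}")
```

With a group of nine, every single click moves the needle by about 11 points, so treat these rates as a snapshot of one exercise and not a statistical benchmark.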

Albert Invent’s Takeaway

“Our mission is to help scientists invent the future faster, but innovation can’t happen without security. If we want to help the world invent faster, we have to defend faster. We brought in Coalfire’s AI team to test our defenses against real-world AI threats. Their attack simulation proved we’re ready. Now, we can push forward confidently, building the future of chemistry with AI.”

Connect with Coalfire and own next-gen AI threats head-on.

Coalfire and Albert Invent are already expanding training to cover deepfake response playbooks and multi-modal simulations, including AI-spoofed video and SMS attacks.

Generative AI is a double-edged sword: a powerful tool for innovation and a growing weapon in the hands of cyber adversaries.

If you want to protect your people, data, and future innovations from AI-driven cyber threats, don’t wait until it’s too late.